Did you know that you can pull data from websites into your Google spreadsheet, automatically? There is an incredibly useful function in Google Sheets called IMPORTXML, which you can use to retrieve data from web pages, where that data is pulled into a Google spreadsheet on an automated basis. This is a formula that I use to pull stock / cryptocurrency data with, but you can use it for many different things, from almost any website.

This formula requires a little bit of extra knowledge about web pages and how to find something called, and “XPath”, but I will teach you how to do this in this lesson.

To pull data from a website into a Google spreadsheet, or in other words to web scrape in Google Sheets, follow these steps:

- Type “=IMPORTXML(” or click “Insert” → “Function” → “Web” → “IMPORTXML”

- Copy the URL for the website that you want to pull information from, and paste it into your formula between quotation marks, like this: =IMPORTXML(“https://finance.yahoo.com/quote/TSLA/”

- Type a comma, and then paste the XPath for the element on the web page that you want to pull information from, between quotation marks, like this: =IMPORTXML(“https://finance.yahoo.com/quote/TSLA/”, “/html/body/div[1]/div/div/div[1]/div/div[2]/div/div/div[5]/ div/div/div/div[3]/div[1]/div/fin-streamer[1]”)

- Press “Enter” on the keyboard

See below to learn about XPath, and how to get the XPath of an “element” from a web page so that you can use the IMPORTXML function in Google Sheets.

(The video above is the extended version with lots of detail, you can also find the shortened version below)

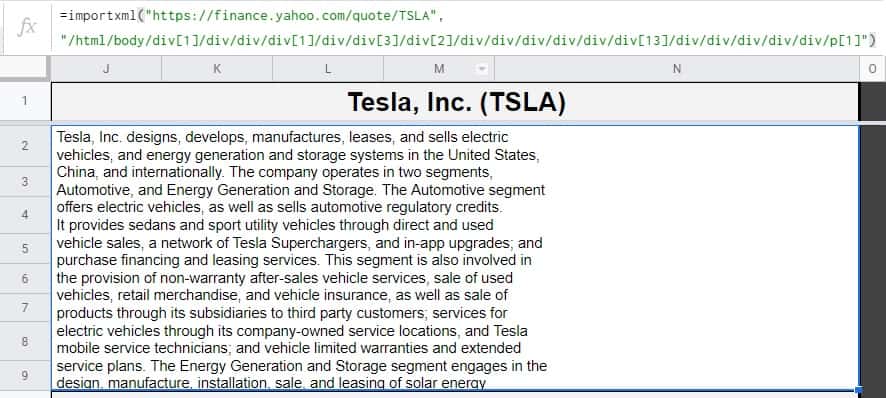

Below is an example of a formula that pulls in the “Business Summary” of the company / stock Tesla (TSLA), into a Google spreadsheet. Further below I will go over an example of how to create this formula, but first there are important things to learn about inspecting website elements and about what XPath is. (Remember that websites often change their code, and so the XPath in the formula examples may be outdated, but you can find the updated versions by following the lesson.

Web scraping Google Sheets formulas (Pull data from a website):

Note that the formulas below are simply examples, and that companies frequently change their websites and so these formulas may not work for you, but you can follow the lesson to learn how to update the proper “XPath” to assure that the formulas work. Note that the formulas on this page may have spaces added into them so that they fit more easily in the pictures and on the web page, but this will not affect the functionality of the formula (So that the formula is shown in multiple lines rather than one very wide string).

Pull data from a website into a Google spreadsheet formula (Business summary example)

- =IMPORTXML(“https://finance.yahoo.com/quote/TSLA/”, “/html/body/div[1]/div/div/div[1]/div/div[3]/div[2]/ div/div/div/div/div/div[13]/div/div/div/div/div/p[1]”)

Pull data from a website into a Google spreadsheet (Stock quote example)

- =IMPORTXML(“https://finance.yahoo.com/quote/TSLA/”, “/html/body/div[1]/div/div/div[1]/div/div[2]/div/div/div[5]/ div/div/div/div[3]/div[1]/div/fin-streamer[1]”)

The Google Sheets IMPORTXML formula:

The IMPORTXML formula is a truly incredible formula that can do lots of very useful things. I personally use this formula to pull data related to stocks into a spreadsheet, in addition to using it to pull cryptocurrency prices.

This is a more advanced function that requires a little bit of knowledge about web page elements, and “XPath”. But don’t worry, I will teach you what you need to know to understand these things, so that you are comfortable using the IMPORTXML formula.

The Google Sheets IMPORTXML function description:

Syntax:

IMPORTXML(url, xpath_query, locale)Formula summary: “Imports data from any of various structured data types including XML, HTML, CSV, TSV, and RSS and ATOM XML feeds.”

The two things needed to use IMPORTXML

There are two important things that we need to use the IMPORTXML formula to pull data from websites. Those two things, are the website URL, and the XPath of the web page element that you are trying to pull data from.

The website URL is simple enough to copy and paste from the top of the web page, but below make sure that you read the part about how to find the XPath of web page elements, and I highly suggest that you watch the video on this lesson to make learning much easier.

#1 Website URL / Web address

The IMPORTXML formula will require the URL of the web page that you are pulling data from, and so note that when we create our formula in the examples below, we will be copying the URL (Website address) from the top of the web page that we are pulling data from, and we will paste it into our formula.

Further in the lesson I will also show you how to combine cell references with the website URL so that you can change the value in a cell, to change the web page that you are pulling data from, very easily.

#2 XPath

“XPath” stands for “XML Path Language”, which is a language frequently used in web scraping. Don’t worry you don’t need to learn this language, you just need to know where to find the code on a web page which I will show you how to do. Just think of the XPath as an address for where something is located in a document or on a web page.

This is the other criteria that is required in the IMPORTXML function, and so we will be copying and pasting the XPath of an element on a web page, and then we will paste that XPath into our Google Sheets formulas. Learn more about this process below.

About XPath and website elements (How to web scrape)

This will be a great lesson for anyone who is just learning to web scrape on any platform. To use the IMPORTXML function, we will need to find the “XPath” of the element that you want to pull data from. This can be done by inspecting an element on a web page.

Each web page consists of many different “elements”. The buttons and the toolbars and the blocks of text etc., are all “elements”. Each element has an XPath, which is a code that can tell the IMPORTXML formula where to pull data from.

To find the XPath of an element on a web page, follow these steps:

- On the desired web page, hover your cursor over the element that you want to pull data from

- Right-click your mouse

- Click “Inspect”

- A bar will pop up on the right, and the code which represents the element that you right-clicked will be highlighted. Right-click on the highlighted code.

- Hover your cursor over “Copy”, and then click “Copy full XPath”

See below for more detailed steps on this process, with images and an example.

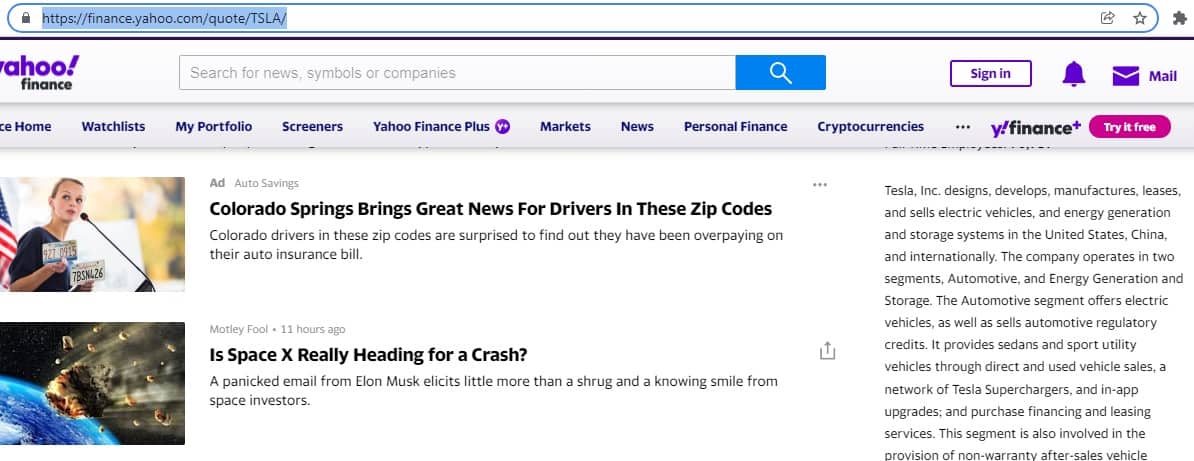

Go to the web page that contains the data that you want to pull with the IMPORTXML function. In this example we are going to the URL: https://finance.yahoo.com/quote/TSLA/

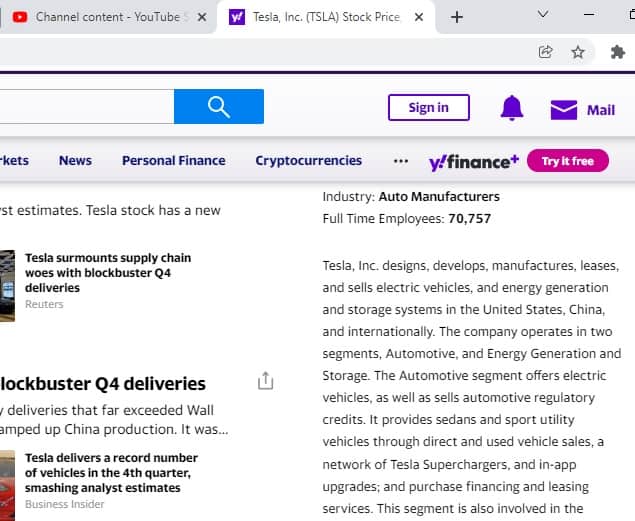

We are going to find / copy the XPath for the element on this page that displays the “Business Summary”, for the company Tesla. This business summary element can be found by scrolling down the screen and looking on the right, as shown in the image below.

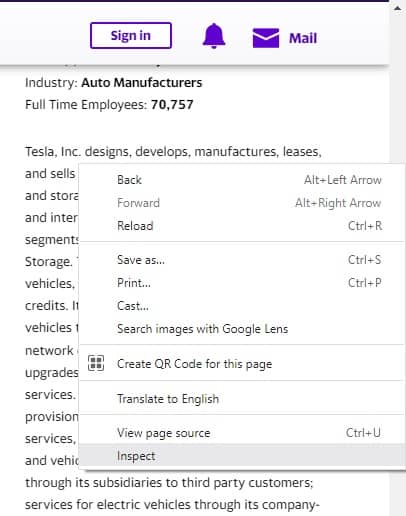

Hover your cursor over the text in the business summary (over the element), and then right-click your mouse. A menu will pop up, and at the bottom of this menu, click “Inspect”.

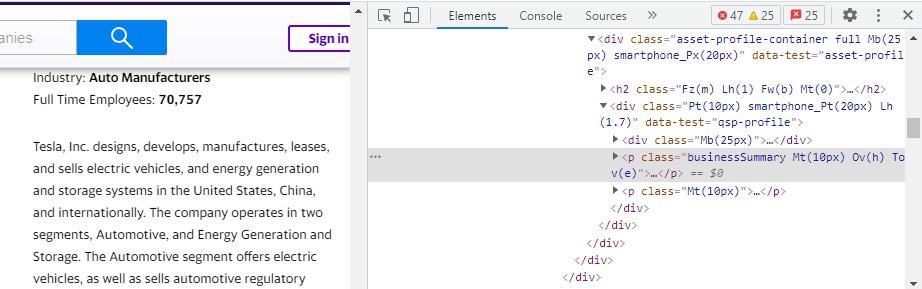

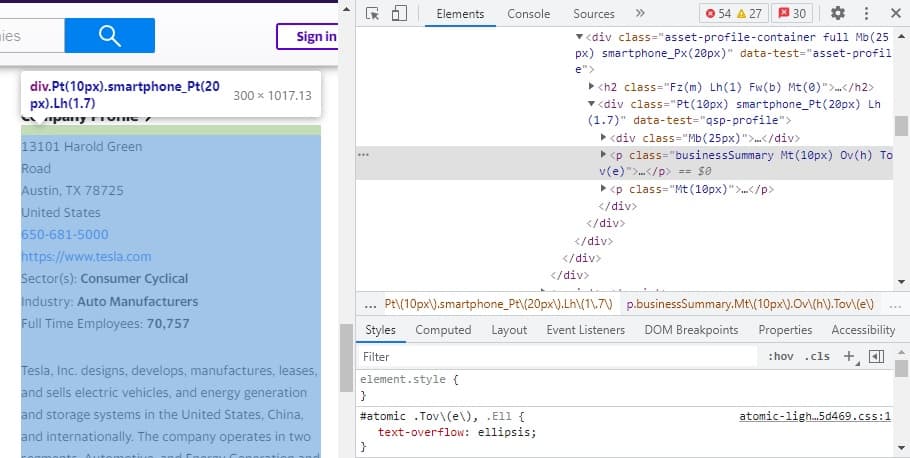

A bar will pop up on the right of your screen after clicking “Inspect”, and some of the code for the website will be displayed. A block of code will be highlighted, and this block represents the element that you right-clicked.

Right-click on the highlighted block of code, hover your cursor over “Copy”, and then click “Copy full XPath”. If you just click “Copy XPath”, this usually works, but sometimes it will include shortened code, and so make sure to copy the full XPath just in case.

Advanced troubleshooting

Sometimes you may have to do a little bit of extra searching to get the correct XPath for the element that you are wanting to pull data from. Sometimes when you right-click on an element, you will not be taken to the exact block of code that you need. Sometimes you might not realize this until you try entering the XPath in your formula. Don’t worry, this is a normal part of web scraping.

Web pages consist of many different sections and containers etc., and often there are elements that are contained within other elements, such as a block of text which is inside of a sidebar. Sometimes when you right-click an element, you might be clicking on a container that holds the element, or a tiny portion of the elements that you want…. but again don’t worry! Google makes it easy to see which element is connected to each portion of code, so that you can find the correct element / XPath for your IMPORTXML formula.

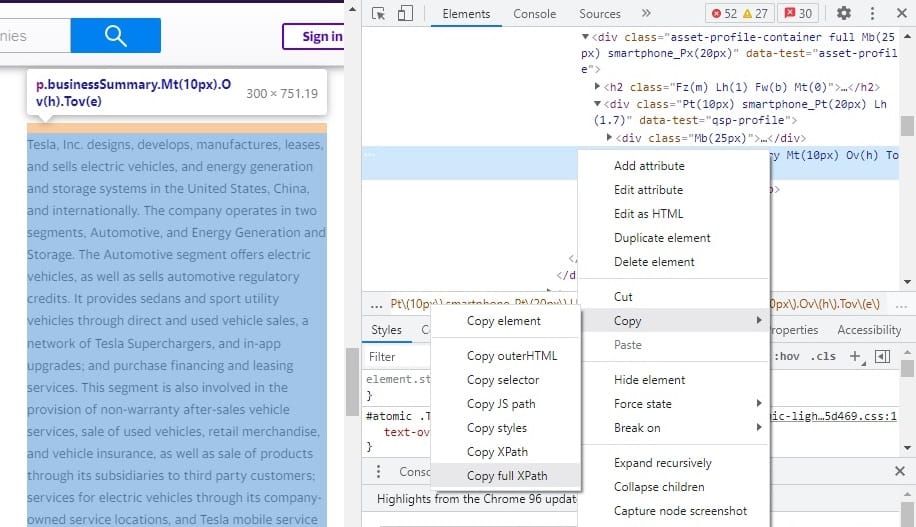

The trick is to hover your cursor over different lines of code, and to look on the left side of your screen (the normal web page) to see which element is highlighted in blue color. (The YouTube video above makes this much easier to see).

If you drag your cursor up / down to hover over different lines of code, the highlighted element on the left will change as you do so, as shown in the images below.

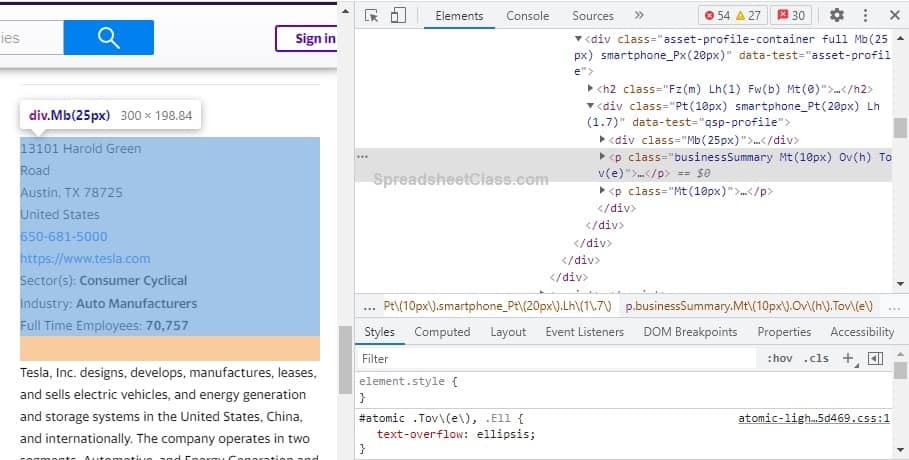

In the image direct below, the cursor is being hovered over the line of code that is directly above the “Business Summary”, where it says “Mb(25px), and as you can see on the left side of the page, the element that is directly above the business summary has been highlighted in blue (The address, phone number etc.).

In the image below, the cursor has been dragged even further up so that it is being hovered over the code that says “smartphone_Pt”, and as you can see in the image this code is a container that holds both the business summary block as well as the address / phone number etc., and so since the cursor is hovered over this code, the entire container / both clocks are highlighted.

So if you right-click an element, and you are not taken to the exact right block of code for the element, you can find the correct one by dragging your cursor up and down, and looking on the left side of the screen as you do so… to make sure that the correct element is highlighted, and therefore so that you copy the XPath of the correct element.

An additional note is to notice that there are small triangles in the code that allow you to expand and collapse sections of code, and sometimes (rare) you may have to click these arrows before dragging your cursor to find the correct element. (Again the YouTube video demonstrates this much better).

But now you know how to find the XPath of an element on a web page, so that you can pull data from the desired part of the website into your Google spreadsheet.

Now let’s go over a couple examples since you know how to get the XPath.

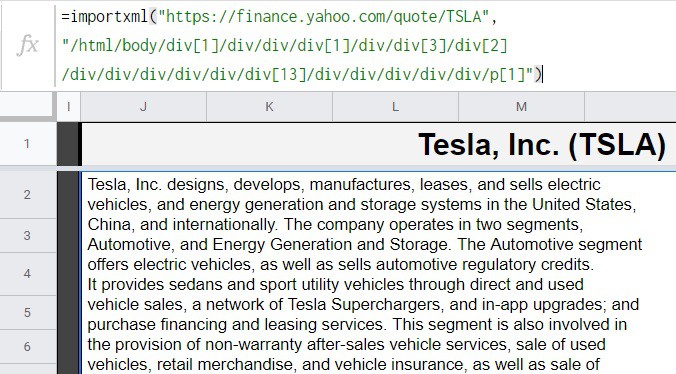

Example 1: Pull the business summary for Tesla (TSLA) from a web page

This example assumes that you have followed the lesson above on how to find XPath. If you do not know how to find the XPath of a web page element, go back and learn that before trying the examples.

The task: Pull the “Business Summary” i.e. the company description for Tesla, from a Yahoo Finance stock quote page, into a Google spreadsheet

The logic: From the URL, https://finance.yahoo.com/quote/TSLA/, pull the data that is contained in the “Business Summary” element into a Google spreadsheet, by referring to the XPath of the business summary element

The formula: The formula below, is entered in cell J2, for this example

=IMPORTXML(“https://finance.yahoo.com/quote/TSLA/”, “/html/body/div[1]/div/div/div[1]/div/div[3]/div[2]/div/div/ div/div/div/div[13]/div/div/div/div/div/p[1]”)

Notice that above the business summary is shown in a spreadsheet cell (The same summary that can be seen in the image below from the Yahoo Finance page)

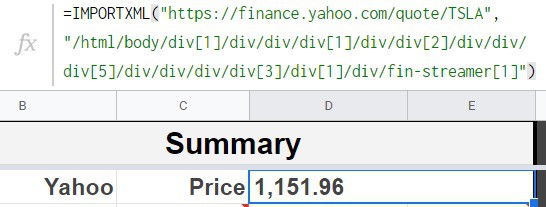

Example 2: Pull the stock price for Tesla (TSLA) from a web page

This example assumes that you have followed the lesson above on how to find XPath. If you do not know how to find the XPath of a web page element, go back and learn that before trying the examples.

The task: Pull the price of Tesla stock from a Yahoo Finance stock quote page, into a Google spreadsheet

The logic: From the URL, https://finance.yahoo.com/quote/TSLA/, pull the data that is contained in the element which shows the stock price, into a Google spreadsheet, by referring to the XPath of the element that shows the stock price

The formula: The formula below, is entered in cell D6, for this example

=IMPORTXML(“https://finance.yahoo.com/quote/TSLA/”, “/html/body/div[1]/div/div/div[1]/div/div[2]/div/div/div[5]/ div/div/div/div[3]/div[1]/div/fin-streamer[1]”)

Using cell references with the IMPORTXML function

If you want, you can use cell references with the IMPORTXML function, so that your URL and XPath can be entered into spreadsheet cells… where the IMPORTXML function refers to the appropriate cells instead of having the text for URL / XPath entered directly into the formula.

For example, if you enter the website URL in cell C1, and if you enter the XPath for the element into cell D1, then your IMPORTXML formula could refer to eh URL / XPath like this:

=IMPORTXML(C1, D1)

Or you can choose to use a cell reference for just the URL, or just the XPath, like in these formulas:

=IMPORTXML(C1, “/html/body/div[1]/div/div/div[1]/div/div[2]/div/div/div[5]/ div/div/div/div[3]/div[1]/div/fin-streamer[1]”)

=IMPORTXML(“https://finance.yahoo.com/quote/TSLA/”, D1)

Something really cool that you can do with the IMPORTXML function, is to combine the website URL text with cell references, so that you can change the content of a cell to quickly change the URL in the formula.

This works well with pages that have the same structure as other pages, where you can go to a different URL and the XPath for the appropriate element stays the same… such as on the stock quote pages where the stock symbol is contained in the URL, and each page that shows a stock quote is structured the same (With the same XPath for each element).

For example in the formula below, the contents of cell B2 are appended to (combined with) the URL text “https://finance.yahoo.com/quote/”, where the stock symbol is entered into cell B2, and the full URL is formed by the first portion of the URL text being combined with the stock symbol in cell B2.

The “&” symbol (ampersand) can be used to combine strings of text and cell references, as shown in the formulas example below.

=IMPORTXML(“https://finance.yahoo.com/quote/”&B2, “/html/body/div[1]/div/div/div[1]/div/div[2]/div/div/div[5]/ div/div/div/div[3]/div[1]/div/fin-streamer[1]”)

Note that if the stock symbol “TSLA” is entered into cell B2, the formula below will give the same result as the formula above.

=IMPORTXML(“https://finance.yahoo.com/quote/TSLA”, “/html/body/div[1]/div/div/div[1]/div/div[2]/div/div/div[5]/ div/div/div/div[3]/div[1]/div/fin-streamer[1]”)

Advanced IMPORTXML Web scraping (Dealing with multiple columns & rows)

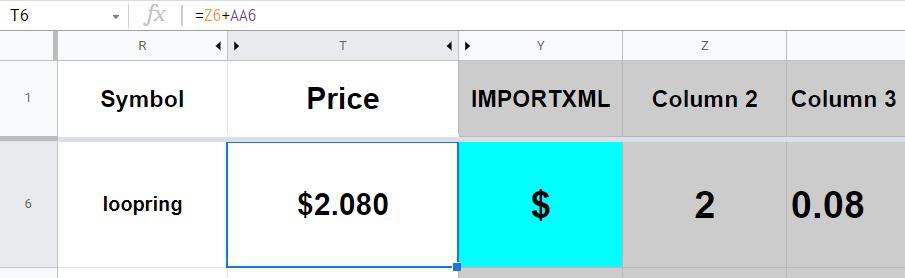

Note that some website elements will pull in multiple columns or multiple rows, from a single element. This is shown in the image below where a cryptocurrency price is being pulled in from Coinbase, and the element that is being referred to contains three columns of data. In this case the dollar sign, the dollar amount, and the number of cents, are all in separate columns, and I have had to add together cells Z6 and AA6 to get the dollar amount combined with the number of cents.

If you want / need, you can also use the INDEX function combined with the IMPORTXML function to retrieve specified columns / rows from an IMPORTXML result.

Note how in the image above the IMPORTXML result was split into three columns, but I was able to simply add together two of the cells to get the desired value (as shown below).

This content was originally created by Corey Bustos / SpreadsheetClass.com

A note on how IMPORTXML does not work on some website

Some websites do not allow the IMPORTXML formula to work / to pull information from it. If the website does not allow “bots” or use of the IMPORTXML function, your formula may simply show an error, or in some cases the formula may return a message that says it does not allow bots to crawl the website.

A note on automation and formula limits

Note that the IMPORTXML formula pulls data automatically every so often. Also note that there is a limit on how many formulas can be used / how much data can be pulled in a single spreadsheet. If your formulas say “Loading” you may have too many IMPORTXML formulas in your sheet. Google Sheets may also show a message on your formula that tells you that you have exceeded the limit. This can be fixed by reducing the number of IMPORTXML formulas, and also by simply waiting a little while. Note that if you use the formulas as I showed in the video, where you frequently change the URL, then this can also cause the limit to be met by constantly pulling new data.

Pop Quiz: Test your knowledge

Answer the questions below about the IMPORTXML formula, to refine your knowledge! Scroll to the very bottom to find the answers to the quiz.

Classroom downloads:

Get your free Google Sheets function cheat sheet

Question #1

Which of the following items are needed for the IMPORTXML function?

- Website URL

- XPath of the element

- Both

Question #2

True or False: The XPath for a website element never changes

- True

- False

Question #3

True or False: The IMPORTXML function works on all websites

- True

- False

Question #4

Which of the following is an Xpath?

- html/body/div[1]/div/div/div[1]/div/div[3]/div[2]/ div/div/div/div/div/div[13]/div/div/div/div/div/p[1]

- https://finance.yahoo.com/quote/TSLA/

Question #5

Which of the following is a URL?

- html/body/div[1]/div/div/div[1]/div/div[3]/div[2]/ div/div/div/div/div/div[13]/div/div/div/div/div/p[1]

- https://finance.yahoo.com/quote/TSLA/

Question #6

The URL and the XPath must be entered between quotation marks, in the Google Sheets IMPORTXML function

- True

- False

Answers to the questions above:

Question 1: 3

Question 2: 2

Question 3: 2

Question 4: 1

Question 5: 2

Question 6: 1